Originating author is Christiane Rousseau.

From its very beginning, Google became “the” search engine. This comes from the supremacy of its ranking algorithm: the PageRank algorithm. Indeed, with the enormous quantity of pages on the World-Wide-Web, many searches end up with thousands or millions of results. If these are not properly ordered, then the search may not be of any help, since no one can explore millions of entries.

How does the PageRank algorithm work?

http://www.mathunion.org/icmi/other-activities/klein-project/introduction/

rather than

http://www.kleinproject.org/

will appear first in a search for Klein project.

To explain the algorithm, we model the web as an oriented graph. The vertices are the pages, and the oriented edges are the links between pages. As we just explained, each page corresponds to a different URL. Hence, a website may contain many pages. The model does not distinguish between the individual pages of a website and its front page. But, most likely, the algorithm will rank the front page of an important website higher.

A simple example

Let us look at the simple web on the left with five pages named $A$, $B$, $C$, $D$, and $E$. This web has few links. If we are on page $A$, then there is only one link to page $B$, while, if we are on page $C$, we find three links, and we can choose to move to either page $A$, or $B$, or $E$. Notice that there is at least one link from each page.

We play a game, which is simply a random walk on the oriented graph. Starting from a page, at each step we choose at random a link from the page where we are, and we follow it. For instance, in our example, if we start on page $B$, then we can go to $A$ or to $C$ with probability $\frac12$ for each case while, if we start on $D$, then we necessarily go to $A$ with probability $1$. We iterate the game.

We summarize the web in the following matrix $P$, in which each column represents the departing page and each row the arrival page:

![Rendered by QuickLaTeX.com \[P=\begin{matrix} \begin{matrix} A & B & C & D & E \end{matrix} & \\ \left(\ \ \begin{matrix} 0 & \frac12 & \frac13 & 1 & 0 \\ 1 & 0 & \frac13 & 0 & \frac13 \\ 0 & \frac12 & 0 & 0 & \frac13 \\ 0 & 0 & 0 & 0 & \frac13 \\ 0 & 0 & \frac13 & 0 & 0 \end{matrix} \ \ \right) & \begin{matrix} A \\ B\\ C\\ D\\ E\end{matrix} \end{matrix}\]](https://blog.kleinproject.org/wp-content/ql-cache/quicklatex.com-042ffb6838118a58c7d9602fe9856d0b_l3.png)
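The matrix can be built mechanically from the list of links. The following is a minimal sketch, assuming the links read off the columns of $P$ above; the names `links` and `transition_matrix` are introduced here for illustration only.

```python
from fractions import Fraction

# Outgoing links of the five-page example, read off the columns of P.
links = {
    "A": ["B"],
    "B": ["A", "C"],
    "C": ["A", "B", "E"],
    "D": ["A"],
    "E": ["B", "C", "D"],
}

def transition_matrix(links):
    """Return (pages, P) where P[i][j] is the probability of moving
    from page j (column) to page i (row) in one step."""
    pages = sorted(links)
    index = {page: k for k, page in enumerate(pages)}
    n = len(pages)
    P = [[Fraction(0)] * n for _ in range(n)]
    for source, targets in links.items():
        for target in targets:
            # Each outgoing link of a page is followed with equal probability.
            P[index[target]][index[source]] = Fraction(1, len(targets))
    return pages, P

pages, P = transition_matrix(links)
column_sums = [sum(P[i][j] for i in range(len(pages))) for j in range(len(pages))]
for page, row in zip(pages, P):
    print(page, [str(entry) for entry in row])
```

Exact fractions are used so that each column can be checked to sum to exactly $1$, which only holds because every page has at least one outgoing link.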

Let us remark that the sum of entries of any column of $P$ is equal to $1$ and that all entries are greater than or equal to zero. Matrices having these two properties are very special: each such matrix is the matrix of a Markov chain process, also called Markov transition matrix. It always has $1$ as an eigenvalue and there exists an eigenvector with eigenvalue $1$, all the components of which are less than or equal to $1$ and greater than or equal to $0$, with sum equal to $1$. But, before recalling the definitions of eigenvalue and eigenvector, let us explore the advantage of the matrix representation of the web graph.

We consider a random variable $X_n$ with values in the set of pages $\{A, B, C, D, E\}$, which contains $N$ pages (here $N=5$). $X_n$ represents the page where we are after $n$ steps of the random walk. Hence, if we call $p_{ij}$ the entry of the matrix $P$ located on the $i$-th row and $j$-th column, then $p_{ij}$ is the conditional probability that we are on the $i$-th page at step $n+1$, given that we were on the $j$-th page at step $n$:

\[p_{ij}=\text{Prob}(X_{n+1}=i \mid X_n=j).\]

Note that this probability is independent of $n$! We say that a Markov chain process has no memory of the past. It is not difficult to figure that the probabilities after two steps can be summarized in the matrix $P^2$.

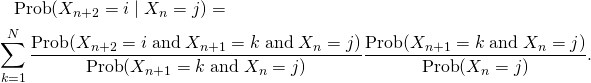

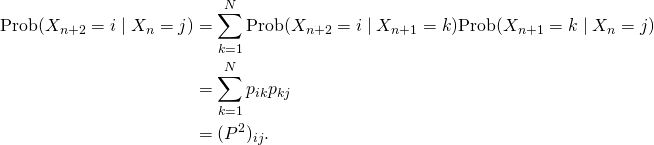

Let us prove it (you can skip the proof if you prefer). The theorem of total probabilities yields

![Rendered by QuickLaTeX.com \[\text{Prob}(X_{n+2}=i \mid X_n=j)=\sum_{k=1}^N\text{Prob}(X_{n+2}= i \;\text{and}\; X_{n+1}=k \mid X_n=j).\]](https://blog.kleinproject.org/wp-content/ql-cache/quicklatex.com-d218b844918839f2cc29f13a5c22a02d_l3.png)

By definition of conditional probability

![Rendered by QuickLaTeX.com \[\text{Prob}(X_{n+2}=i \mid X_n=j)=\sum_{k=1}^N\frac{\text{Prob}(X_{n+2}= i \;\text{and}\; X_{n+1}=k \;\text{and}\; X_n=j)} {\text{Prob}(X_n=j)}.\]](https://blog.kleinproject.org/wp-content/ql-cache/quicklatex.com-5106f79e05a5ce44aba08836606f60c1_l3.png)

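We use a familiar trick: multiply and divide by the same quantity:

\[\text{Prob}(X_{n+2}=i \mid X_n=j)=\sum_{k=1}^N\frac{\text{Prob}(X_{n+2}= i \;\text{and}\; X_{n+1}=k \;\text{and}\; X_n=j)}{\text{Prob}(X_{n+1}=k \;\text{and}\; X_n=j)}\cdot\frac{\text{Prob}(X_{n+1}=k \;\text{and}\; X_n=j)}{\text{Prob}(X_n=j)}.\]

The first quotient is equal to

\[\text{Prob}(X_{n+2}=i \mid X_{n+1}=k \;\text{and}\; X_n=j)=\text{Prob}(X_{n+2}=i \mid X_{n+1}=k),\]

since a Markov chain process has no memory past the previous step. Hence,

\[\text{Prob}(X_{n+2}=i \mid X_n=j)=\sum_{k=1}^N\text{Prob}(X_{n+2}=i \mid X_{n+1}=k)\,\text{Prob}(X_{n+1}=k \mid X_n=j)=\sum_{k=1}^N p_{ik}\,p_{kj}=(P^2)_{ij}.\]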

In our example

![Rendered by QuickLaTeX.com \[P^2=\begin{matrix} \begin{matrix} A & B & C & D & E \end{matrix} & \\ \left(\ \ \begin{matrix}\frac12 & \frac16 & \frac16 & 0 & \frac{11}{18} \\ 0 & \frac23 & \frac49 & 1 & \frac19 \\ \frac12 & 0 & \frac5{18} & 0 & \frac16 \\ 0 & 0 & \frac19 & 0 & 0 \\ 0 & \frac16 & 0 & 0 & \frac19 \end{matrix} \ \ \right) & \begin{matrix} A \\ B\\ C\\ D\\ E\end{matrix} \end{matrix}\]](https://blog.kleinproject.org/wp-content/ql-cache/quicklatex.com-c815aee0d8d73d9494ae3dcdbf160507_l3.png)
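The entries of $P^2$ can be reproduced with exact rational arithmetic. This is a minimal sketch, assuming the matrix $P$ of the example; the helper `matmul` is a name introduced here for illustration.

```python
from fractions import Fraction as F

# Transition matrix of the example: column j = departing page, row i = arrival page.
P = [
    [0, F(1, 2), F(1, 3), 1, 0],
    [1, 0, F(1, 3), 0, F(1, 3)],
    [0, F(1, 2), 0, 0, F(1, 3)],
    [0, 0, 0, 0, F(1, 3)],
    [0, 0, F(1, 3), 0, 0],
]

def matmul(A, B):
    """Product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

# Entry (i, j) of P2 is the sum over k of p_ik * p_kj: the two-step probability.
P2 = matmul(P, P)
for row in P2:
    print([str(entry) for entry in row])
```

For instance, the entry in row $A$, column $E$ is $\frac13\cdot\frac12+\frac13\cdot\frac13+\frac13\cdot 1=\frac{11}{18}$: from $E$ we reach $A$ in two steps through $B$, $C$ or $D$.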

Iterating this idea, it is clear that the entry $(P^m)_{ij}$ of the matrix $P^m$ describes the probability $\text{Prob}(X_{n+m}=i \mid X_n=j)$. For instance,

![Rendered by QuickLaTeX.com \[P^{32} =\begin{matrix} \begin{matrix} \:\:A \quad& \:\:B\quad & \:\:C \quad& \:\:D\quad &\:\: E \quad\end{matrix} & \\ \left(\ \ \begin{matrix} 0.293 & 0.293 & 0.293 & 0.293 & 0.293 \\ 0.390 & 0.390 & 0.390 & 0.390 & 0.390 \\ 0.220 & 0.220 & 0.220 & 0.220 & 0.220 \\ 0.024 & 0.024 & 0.024 & 0.024 & 0.024 \\ 0.073 & 0.073 & 0.073 & 0.073 & 0.073 \end{matrix} \ \ \right) & \begin{matrix} A \\ B\\ C\\ D\\ E\end{matrix}\end{matrix}\]](https://blog.kleinproject.org/wp-content/ql-cache/quicklatex.com-de62ad1480800013e6d29802e633ec19_l3.png)

All columns of $P^{32}$ are identical if we choose precision to 3 decimals, and the same as the columns of $P^n$ when $n>32$. If we choose a higher precision, we also find stabilization, but for $n$ larger than $32$. Hence, after $n$ steps, where $n$ is sufficiently large, the probability of being on a page is independent from where we started!
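The stabilization of the columns can be observed numerically. The sketch below assumes the example matrix in floating point and computes the power by plain repeated multiplication; `matrix_power` and `spread` are names chosen here for illustration.

```python
# Transition matrix of the example, in floating point.
P = [
    [0, 1/2, 1/3, 1, 0],
    [1, 0, 1/3, 0, 1/3],
    [0, 1/2, 0, 0, 1/3],
    [0, 0, 0, 0, 1/3],
    [0, 0, 1/3, 0, 0],
]

def matmul(A, B):
    """Product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def matrix_power(M, exponent):
    """Compute M**exponent (exponent >= 1) by repeated multiplication."""
    result = M
    for _ in range(exponent - 1):
        result = matmul(result, M)
    return result

P32 = matrix_power(P, 32)
for row in P32:
    print(" ".join(f"{entry:.3f}" for entry in row))

# Largest gap between two entries of a same row, i.e. between two columns.
spread = max(max(row) - min(row) for row in P32)
print("columns agree to within 1e-3:", spread < 1e-3)
```

Trying smaller exponents in place of 32 shows how the gap between columns shrinks as the number of steps grows.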

Moreover, let us consider the vector

\[\pi^t=(0.293,\ 0.390,\ 0.220,\ 0.024,\ 0.073)\]

($\pi$ is a vertical vector, and its transpose $\pi^t$ is a horizontal vector). It is easily checked that $P\pi=\pi$. If we consider the $i$-th coordinate of the vector $\pi$ as the probability of being on page $i$ at a given time $n$, and hence $\pi$ as the probability distribution of pages at time $n$, then it is also the probability distribution at time $n+1$. For this reason, the vector $\pi$ is called the stationary distribution. This stationary distribution allows us to order the pages. In our example, we order the pages as $B$, $A$, $C$, $E$, $D$, and we declare $B$ the most important page.
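The ordering of the pages can be reproduced by iterating the random walk on a probability vector and sorting the result. This is a minimal sketch under the assumption that 200 iterations from the uniform distribution are enough for this small example; `step` and `ranking` are names introduced here.

```python
pages = ["A", "B", "C", "D", "E"]

# Transition matrix of the example, in floating point.
P = [
    [0, 1/2, 1/3, 1, 0],
    [1, 0, 1/3, 0, 1/3],
    [0, 1/2, 0, 0, 1/3],
    [0, 0, 0, 0, 1/3],
    [0, 0, 1/3, 0, 0],
]

def step(P, x):
    """One step of the walk: return the distribution P x."""
    n = len(x)
    return [sum(P[i][j] * x[j] for j in range(n)) for i in range(n)]

# Start from the uniform distribution and iterate until it stabilizes.
x = [1 / len(pages)] * len(pages)
for _ in range(200):
    x = step(P, x)

# Pages sorted by decreasing stationary probability.
ranking = sorted(pages, key=lambda page: x[pages.index(page)], reverse=True)
print([f"{value:.3f}" for value in x])
print(ranking)
```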

The general case

The general case can be treated exactly as our example. We represent the web as an oriented graph in which the $N$ vertices represent the $N$ pages of the web, and the oriented edges represent the links between pages. We summarize the graph in an $N\times N$ matrix, $P$, with the $j$-th column representing the $j$-th departing page and the $i$-th row, the $i$-th arrival page. In our example we found a vector $\pi$ satisfying $P\pi=\pi$. This vector is an eigenvector of the eigenvalue $1$. Let us recall the definition of eigenvalue and eigenvector.

Definition. Let $P$ be an $N\times N$ matrix. A number $\lambda\in\mathbb{C}$ is an eigenvalue of $P$ if there exists a nonzero vector $X\in\mathbb{C}^N$ such that $PX=\lambda X$. Any such vector $X$ is called an eigenvector of $P$.

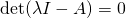

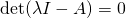

We also recall the method to find the eigenvalues and eigenvectors.

Proposition. Let $P$ be an $N\times N$ matrix. The eigenvalues of $P$ are the roots of the characteristic polynomial $\det(\lambda I-P)$, where $I$ is the $N\times N$ identity matrix. The eigenvectors of an eigenvalue $\lambda$ are the nonzero solutions of the linear homogeneous system $(\lambda I-P)X=0$.

The following deep theorem of Frobenius guarantees that for the matrix associated to a web graph, we will always find a stationary solution.

Theorem (Frobenius theorem). We consider an $N\times N$ Markov transition matrix $P=(p_{ij})$ (i.e. $p_{ij}\in[0,1]$ for all $i,j$, and the sum of entries on each column is equal to $1$, namely $\sum_{i=1}^N p_{ij}=1$). Then

- $\lambda=1$ is one eigenvalue of $P$.
- Any eigenvalue $\lambda$ of $P$ satisfies $|\lambda|\leq 1$.
- There exists an eigenvector $\pi$ of the eigenvalue $1$, all the coordinates of which are greater than or equal to zero. Without loss of generality we can suppose that the sum of its coordinates is $1$.

It is now time to admire the power of this theorem. For this purpose, we will make the simplifying hypothesis that the matrix $P$ has a basis of eigenvectors $\mathcal{B}=\{v_1,\dots,v_N\}$ and we suppose that $v_1$ is the vector $\pi$ of Frobenius theorem. For each $v_i$, there exists $\lambda_i$ such that $Pv_i=\lambda_iv_i$. Let us take any nonzero vector $X$ with $X^t=(x_1,\dots,x_N)$, where $x_i\in[0,1]$ and $\sum_{i=1}^N x_i=1$. We decompose $X$ in the basis $\mathcal{B}$:

![Rendered by QuickLaTeX.com \[X=\sum_{i=1}^N a_iv_i.\]](https://blog.kleinproject.org/wp-content/ql-cache/quicklatex.com-75c52ea84c4a0aa148f3aa2977bdb8be_l3.png)

A technical proof that we skip allows us to prove that $a_1=1$. Now, let us calculate $PX$:

\[PX= \sum_{i=1}^Na_i Pv_i= \sum_{i=1}^N a_i\lambda_iv_i,\]

since $v_i$ is an eigenvector of the eigenvalue $\lambda_i$. And if we iterate we get

\[P^nX= \sum_{i=1}^N a_i\lambda_i^nv_i.\]

If all $\lambda_i$ for $i>1$ satisfy $|\lambda_i|<1$, then

\[\lim_{n\to\infty}P^nX=a_1v_1=\pi.\]
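The limit can be justified in one line under the same simplifying hypothesis of a basis of eigenvectors. In the sketch below, $\|\cdot\|$ denotes any norm on vectors; this notation is introduced here for illustration and is not part of the original argument. Since $\lambda_1=1$,

\[\left\|P^nX-a_1v_1\right\|=\left\|\sum_{i=2}^N a_i\lambda_i^nv_i\right\|\leq\sum_{i=2}^N |a_i|\,|\lambda_i|^n\,\|v_i\|\longrightarrow 0 \quad\text{as } n\to\infty,\]

because $|\lambda_i|^n\to 0$ whenever $|\lambda_i|<1$. The speed of convergence is therefore governed by the largest modulus $|\lambda_i|$ among the eigenvalues with $i>1$: the closer it is to $1$, the more iterations are needed.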

This is exactly what happens in our example!

Notice however that the theorem does not guarantee that any matrix $P$ satisfying the hypotheses of the theorem will have this property. Let us describe the possible pathologies and the remedy.

Possible pathologies.

- The eigenvalue $1$ may be a multiple root of the characteristic polynomial $\det(\lambda I-P)$.
- The matrix $P$ may have other eigenvalues $\lambda$ than $1$, with modulus equal to $1$.
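Both pathologies already occur on webs with two pages. The sketch below uses two hypothetical toy webs that are not taken from the article: two pages linking only to each other (eigenvalues $1$ and $-1$), and two pages linking only to themselves (eigenvalue $1$ is a double root).

```python
def step(P, x):
    """One step of the walk: return the distribution P x."""
    n = len(x)
    return [sum(P[i][j] * x[j] for j in range(n)) for i in range(n)]

# Hypothetical web 1: page 1 links to page 2 and page 2 links to page 1.
# The eigenvalues are 1 and -1, so a second eigenvalue has modulus 1.
cycle = [[0, 1], [1, 0]]
x = [1.0, 0.0]
history = []
for _ in range(4):
    x = step(cycle, x)
    history.append(x)
print(history)  # the walk oscillates and never converges

# Hypothetical web 2: each page links only to itself.
# The eigenvalue 1 is a double root and every distribution is stationary.
disconnected = [[1, 0], [0, 1]]
first = step(disconnected, [0.3, 0.7])
second = step(disconnected, [0.9, 0.1])
print(first, second)  # two different stationary distributions
```

In the first case the iterates $P^nX$ have no limit; in the second the limit exists but depends on the starting point, so no single ranking comes out of it.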

What do we do in this case?

We call a Markov transition matrix with no pathology a regular Markov transition matrix, namely

Definition. A Markov transition matrix is regular if

- The eigenvalue $1$ is a simple root of the characteristic polynomial $\det(\lambda I-P)$.
- All other eigenvalues $\lambda$ of $P$ than $1$ have modulus less than $1$.

Remark that most matrices $P$ are regular. So, if the Markov transition matrix of a web is not regular, then the strategy is to deform it slightly to a regular one.

Remedy. We consider the $N\times N$ matrix $Q=(q_{ij})$, with $q_{ij}=\frac1N$ for all $i,j$. We replace the matrix $P$ of the web by the matrix

\[P_\beta=(1-\beta)P+\beta Q \tag{1}\]

for some small $\beta\in[0,1]$. (The value $\beta=0.15$ has been used by Google.) Notice that the matrix $P_\beta$ still has nonnegative entries and that the sum of the entries of each column is still equal to $1$, so it is still a Markov transition matrix. The following theorem guarantees that there exists some small $\beta$ for which we have fixed the pathologies.

Theorem. Given any Markov transition matrix $P$, there always exists a positive $\beta$, as small as we wish, such that the matrix $P_\beta$ is regular. Let $\pi$ be the eigenvector of $1$ for the matrix $P_\beta$, normalized so that the sum of its coordinates is $1$. For the matrix $P_\beta$, given any nonzero vector $X$, where $X^t=(x_1,\dots,x_N)$ with $x_i\in[0,1]$ and $\sum_{i=1}^N x_i=1$, then

\[\lim_{n\to\infty}(P_\beta)^nX=\pi. \tag{2}\]
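The effect of the remedy can be seen on a small pathological web. The sketch below assumes a hypothetical two-page web in which each page links only to the other, so that the walk on $P$ oscillates forever, and uses $\beta=0.15$; `google_matrix` is a name chosen here for the matrix $(1-\beta)P+\beta Q$ and is not an established API.

```python
def step(P, x):
    """One step of the walk: return the distribution P x."""
    n = len(x)
    return [sum(P[i][j] * x[j] for j in range(n)) for i in range(n)]

def google_matrix(P, beta):
    """Return (1 - beta) * P + beta * Q, where every entry of Q is 1/N."""
    n = len(P)
    return [[(1 - beta) * P[i][j] + beta / n for j in range(n)]
            for i in range(n)]

# Hypothetical two-page web: each page links only to the other one.
cycle = [[0, 1], [1, 0]]
P_beta = google_matrix(cycle, 0.15)

# The columns of P_beta still sum to 1 and no entry is zero any more.
column_sums = [sum(P_beta[i][j] for i in range(2)) for j in range(2)]

# Starting from page 1 with certainty, the iterates now converge.
x = [1.0, 0.0]
for _ in range(100):
    x = step(P_beta, x)
print(P_beta)
print(x)
```

For this web the eigenvalues of $P_\beta$ are $1$ and $-(1-\beta)=-0.85$, so the oscillation is damped by a factor $0.85$ at each step and the iterates approach $(\frac12,\frac12)$.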

Link with the Banach fixed point theorem

Another (coming) vignette treats the Banach fixed point theorem. The theorem above can be seen as a particular application of it. You can skip this section if you have not read the other vignette. Indeed,

Theorem. Let $P$ be a regular Markov transition matrix. We consider $\mathcal{S}=\{X \mid X^t=(x_1,\dots,x_N),\ x_i\in[0,1],\ \sum_{i=1}^N x_i=1\}$, with an adequate distance $d(X,Y)$ between points (this distance depends on $P$). On $\mathcal{S}$, we consider the linear operator $L:\mathcal{S}\to\mathcal{S}$ defined by $L(X)=PX$. The operator $L$ is a contraction on $\mathcal{S}$, namely there exists $c\in[0,1)$ such that for all $X,Y\in\mathcal{S}$,

\[d(L(X),L(Y))\leq c\,d(X,Y).\]

Then, there exists a unique vector $\pi\in\mathcal{S}$ such that $L(\pi)=\pi$.

Moreover, given any $X_0\in\mathcal{S}$, we can define the sequence $\{X_n\}$ by induction, where $X_{n+1}=L(X_n)$. Then, $\lim_{n\to\infty}X_n=\pi$.

Definition of the distance $d$. The definition of the distance $d$ is a bit subtle and can be skipped. We include it for the sake of completeness for the reader who wants to work through the details. We limit ourselves to the case where the matrix $P$ is diagonalizable. Let $\mathcal{B}=\{v_1=\pi,v_2,\dots,v_N\}$ be a basis of eigenvectors. Vectors $X,Y\in\mathcal{S}$ can be written in the basis $\mathcal{B}$:

![Rendered by QuickLaTeX.com \[X=\sum_{i=1}^N a_iv_i, \quad Y=\sum_{i=1}^N b_iv_i,\]](https://blog.kleinproject.org/wp-content/ql-cache/quicklatex.com-e4878c51bc88f673f3e86656255bb8ef_l3.png)

where $a_i,b_i\in\mathbb{C}$. Then we define

\[d(X,Y)=\sum_{i=1}^N |a_i-b_i|.\]

With this distance, $\mathcal{S}$ is a complete metric space, i.e. all Cauchy sequences converge.

This theorem not only guarantees the existence of $\pi$, but gives a method to construct it, as the limit of the sequence $\{X_n\}$. We have seen an illustration of this convergence in our example. Indeed, the $j$-th column of $P^n$ is the vector $P^ne_j$, where $e_j$ is the $j$-th vector of the canonical basis. Of course, in our example, we could also have found directly the vector $\pi$ by solving the system $(I-P)X=0$ with matrix

![Rendered by QuickLaTeX.com \[I-P= \left(\begin{matrix} 1 &- \frac12 & -\frac13 & -1 & 0 \\ -1 & 1 & -\frac13 & 0 & -\frac13 \\ 0 & -\frac12 & 1 & 0 & -\frac13 \\ 0 & 0 & 0 & 1 & -\frac13 \\ 0 & 0 & -\frac13 & 0 & 1 \end{matrix}\right).\]](https://blog.kleinproject.org/wp-content/ql-cache/quicklatex.com-05e0ba5931bfe376391bdb5e783a74b2_l3.png)

We would have found that all solutions are of the form $(12s,\ 16s,\ 9s,\ s,\ 3s)^t$ for $s\in\mathbb{R}$. The solution whose sum of coordinates is $1$ is hence $\pi$, where

\[\pi^t=\left(\tfrac{12}{41},\ \tfrac{16}{41},\ \tfrac{9}{41},\ \tfrac{1}{41},\ \tfrac{3}{41}\right).\]
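The direct solution can be checked exactly. The sketch below assumes the example matrix $P$ and verifies with rational arithmetic that the vector $(12, 16, 9, 1, 3)^t$ solves $(I-P)X=0$, then normalizes it; the names `candidate` and `residual` are introduced here for illustration.

```python
from fractions import Fraction as F

# Transition matrix of the example: column j = departing page, row i = arrival page.
P = [
    [0, F(1, 2), F(1, 3), 1, 0],
    [1, 0, F(1, 3), 0, F(1, 3)],
    [0, F(1, 2), 0, 0, F(1, 3)],
    [0, 0, 0, 0, F(1, 3)],
    [0, 0, F(1, 3), 0, 0],
]

# Candidate solution of (I - P) X = 0, taking s = 1.
candidate = [12, 16, 9, 1, 3]

# Coordinates of (I - P) X; all of them must vanish.
residual = [candidate[i] - sum(P[i][j] * candidate[j] for j in range(5))
            for i in range(5)]

# Divide by the sum of the coordinates to obtain a probability vector.
total = sum(candidate)
pi = [F(value, total) for value in candidate]

print(residual)
print([str(value) for value in pi])
print([f"{float(value):.3f}" for value in pi])
```

The decimal values printed on the last line can be compared with the columns of $P^{32}$ displayed earlier.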

Practical calculation of the stationary distribution

We have identified the simple idea underlying the algorithm. However, finding the stationary distribution $\pi$, i.e. an eigenvector of the eigenvalue $1$ for the matrix $P_\beta$ in (1), is not at all a trivial task when the matrix has billions of rows and columns: both the time of the computation and the memory space to achieve it represent real challenges. The usual method of Gauss elimination is useless in this case, both because of the size of the computation, and because it requires dividing by small coefficients. A more efficient algorithm makes use of the property (2) (see [LM]). This makes the link with the coming vignette on the Banach fixed point theorem, which will show that the proof of the Banach fixed point theorem provides an algorithm to construct the fixed point.

Indeed, we start with $X_0$ such that

\[X_0^t=\left(\tfrac1N,\ \tfrac1N,\ \dots,\ \tfrac1N\right),\]

and we need to calculate $\lim_{n\to\infty}(P_\beta)^nX_0$. Usually, $(P_\beta)^nX_0$ for some $n$ between $50$ and $100$ gives a pretty good approximation of $\pi$. By induction, we calculate $X_{n+1}=P_\beta X_n$. Even such calculations are quite long. Indeed, due to its construction, the matrix $P_\beta$ in (1) has no zero entries. On the other hand, most of the entries of the matrix $P$ are zero. So, we must decompose the computation to take advantage of this fact, namely

\[X_{n+1}=(1-\beta)PX_n+\beta QX_n.\]

Because of the special form of $Q$, it is easy to verify that if $X$ is a vector the sum of entries of which is $1$, then $QX=\left(\tfrac1N,\dots,\tfrac1N\right)^t$. Hence, it suffices to calculate the sequence

\[X_{n+1}=(1-\beta)PX_n+\beta\left(\tfrac1N,\ \dots,\ \tfrac1N\right)^t.\]
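This decomposition can be sketched directly on link lists, so that only the nonzero entries of $P$ are ever touched. The code below is an illustrative sketch on the five-page example and not Google's implementation; the function name `pagerank`, the default of 100 iterations and the dictionary representation are assumptions made here, and every page is assumed to have at least one outgoing link.

```python
# Outgoing links of the five-page example (the nonzero entries of P).
links = {
    "A": ["B"],
    "B": ["A", "C"],
    "C": ["A", "B", "E"],
    "D": ["A"],
    "E": ["B", "C", "D"],
}

def pagerank(links, beta=0.15, iterations=100):
    """Iterate X_{n+1} = (1 - beta) P X_n + beta (1/N, ..., 1/N)^t.

    Assumes every page has at least one outgoing link."""
    pages = sorted(links)
    n = len(pages)
    x = {page: 1.0 / n for page in pages}  # X_0 is the uniform distribution
    for _ in range(iterations):
        # Contribution of beta * Q * X_n: the constant vector beta / N.
        new = {page: beta / n for page in pages}
        # Contribution of (1 - beta) * P * X_n, following existing links only.
        for source, targets in links.items():
            share = (1 - beta) * x[source] / len(targets)
            for target in targets:
                new[target] += share
        x = new
    return x

scores = pagerank(links)
ranking = sorted(scores, key=scores.get, reverse=True)
for page in ranking:
    print(page, f"{scores[page]:.3f}")
```

The work per iteration is proportional to the number of links plus the number of pages, rather than to $N^2$, which is the point of never forming $P_\beta$ explicitly.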

Conclusion

We have presented the public part of Google’s PageRank algorithm. Already you can experiment with simple webs and find the tricks to improve the ranking of your personal page by adding internal and external links in an optimal way. Some private, more sophisticated parts continue to be developed. Some of them consist in replacing the “neutral” matrix $Q$ of (1) by matrices reflecting the taste of the surfer on the web. Others ensure that the ranking is not too sensitive to the manipulations of those who try to improve the ranking of their pages.

As a general conclusion, what have we observed? A simple, clever idea has led to an immense breakthrough in the efficiency of search engines, and to the birth of a commercial empire. Even if the implementation is in itself a computational feat, the initial idea required “elementary” mathematics, namely linear algebra and probability theory. These relatively standard mathematical tools, in particular the diagonalization of matrices, have shown their full power when used outside of their “normal” context. Also, we have highlighted the unifying ideas inside science, with the Banach fixed point theorem having applications so remote from its origin.

Bibliography

[E] M. Eisermann, Comment Google classe les pages web, http://images.math.cnrs.fr/Comment-Google-classe-les-pages.html, 2009.

[LM] A. N. Langville and C. D. Meyer, A Survey of Eigenvector Methods for Web Information Retrieval, SIAM Review, Volume 47, Issue 1 (2005), pp. 135–161.

[RS] C. Rousseau and Y. Saint-Aubin, Mathematics and technology, SUMAT Series, Springer-Verlag, 2008. (A French version of the book exists, published in the same series.)

Thanks for this piece, but I am still missing the connection between Google’s algorithm and the analysis above. Does the stationary distribution define the web page’s ranking in a search?

How do Markov chains apply in PageRank?

The next challenge is to expose high school students to this and other articles on the Klein project blog website. A study dealing with such a challenge has been ongoing in Israel for the past 2 years, based upon a preliminary presentation at ESU5 (Movshovitz-Hadar 2007) and an action research presented at ESU6 (Amit and Movshovitz-Hadar 2011). We develop short, single-topic PowerPoint presentations named Math-News-Snapshots to be interwoven in the curriculum once a fortnight so that progress in the mandatory curriculum is not harmed yet students get the sense of what’s happening in contemporary mathematics. We will be glad to share more information about the study. Please send personal e-mail to: nitsa at technion dot ac dot il
